Background

I’ve been interested in analysis of single case designs (SCD) using hierarchical models: each case is unique but we can improve estimation for each case by sharing data across cases. Given that data from SCD are often atypical, I’ve thought such data are a good candidate for ordinal regression.1

Broadly, we can model unidimensional2 continuous, count, ordinal data as ordinal, since these data are also ordinal. If we knew the exact distribution for the data, we could perform more efficient analyses, but we often don’t. Frank Harrell often recommends this kind of ordinal approach and provides several resources here. There are also useful papers: here3, here4, and here5, and I’ve applied to same idea to analysis of sum scores of Likert-response scales.6

Given this interest, I did some work on ordinal hierarchical models for analysis of SCD some time back – time stamp on my laptop says January 2022!! This work never left my laptop, and I mentioned it in passing to maybe two people. I thought of reaching out to James Pustejovsky to get his thoughts, but for some reason, I didn’t follow through.

Enter AERA 2024, I somehow found myself at a structured poster session for analysis of SCD. Very interesting work all around; count mixed models, model-based effect size estimation and benchmarks, single case mediation, … Made me want to share this again, and James P. encouraged me to send it his way. So here goes.

Description of hierarchical ordinal model

I focus on simple designs: AB, ABAB, …

I originally did this work with splines for approximating ordinal thresholds e.g.5, and splines for modeling case-specific trajectories. But I settled for something more conventional in the SCD literature.

The model assumes case-specific intercepts and treatment effects, and I use hierarchical modeling to estimate both. Additionally, the data within each case are autocorrelated.

First step is to transform the data to (dense) ranks.

Multivariate normal model for unobserved continuous data given observed ordinal data

If we assume ordinal data were originally normal data that have been discretized and we attempt to build a regression for the original normal data, we arrive at probit regression. Following Albert & Chib (1993)7, we can use this relation to model multivariate ordinal data with multivariate probit regression. This allows us set a correlation structure on the ordinal data at the latent level.

Model

Assume the latent data for case is a -dimensional vector, . are sampled as truncated variables given the constraints imposed by the ordered threshold-vector, . I represent the treatment indicator variable for case as , then the hierarchical ordinal model is:

where is intercept for case shrunken towards 0. Since we will estimate all ordinal thresholds, the grand-mean is 0. The case-specific treatment effect, , is shrunken towards , and is itself shrunken towards 0 (akin to a ridge regression penalty).

I set up the elements of the covariance matrix, , as:

such that is the autocorrelation coefficient. The prior on assumes a 95% chance falls in the interval – this sounds like a good choice.8 The diagonal elements of the matrix are fixed to 1 for the purpose of identifying the probit model.

Effect sizes

The ordinal probit model above is one for the cumulative distribution function (CDF) of the ranks. From there, we compute the probability mass function (PMF). Given the PMF, one can then apply several of the equations listed in the documentation of the SingleCaseES package to obtain effect sizes. I focus on the NAP, PEM and TAU (since it’s just NAP - 1). For effects defined on the original data scale like differences in means or medians, we can simply replace ranks with the original data to obtain results on the original data scale. We can obtain uncertainty intervals for all effects since we compute each effect size within each posterior iteration.

R code

I implemented the model above with Stan. The code is available as a gist file and contains two demonstrations. I review the demonstrations below.

| ### Block 01 -- Install required packages | |

| list_of_packages <- c( | |

| "SingleCaseES", "data.table", "ggplot2", "patchwork", | |

| "ggdist", "scales", "posterior", "styler" | |

| ) | |

| new_packages <- list_of_packages[ | |

| !(list_of_packages %in% installed.packages()[, "Package"]) | |

| ] | |

| if (length(new_packages)) install.packages(new_packages) | |

| rm(list_of_packages, new_packages) | |

| # install CmdStanR | |

| if (!"cmdstanr" %in% installed.packages()[, "Package"]) { | |

| # next install cmdstanr and CmdStan: | |

| install.packages( | |

| "cmdstanr", | |

| repos = c("https://mc-stan.org/r-packages/", getOption("repos")) | |

| ) | |

| } | |

| # the next piece of code tries to install CmdStan, skip it if you have it | |

| if (is.null(cmdstanr::cmdstan_version(error_on_NA = FALSE))) { | |

| cmdstanr::check_cmdstan_toolchain(fix = TRUE) | |

| cmdstanr::install_cmdstan( | |

| cores = max(c(1, round(parallel::detectCores() / 3))) | |

| ) | |

| } | |

| ### Block 02 -- Load packages | |

| styler::style_file("00_joined_example.R") | |

| library(data.table) | |

| library(ggplot2) | |

| library(ggdist) | |

| library(patchwork) | |

| library(scales) | |

| # tasky-example ---- | |

| # load required functions and objects | |

| source("create_ord_stan.R") | |

| dat <- fread("tasky_08.csv") | |

| dat[] | |

| # required variables: | |

| # create outcome variable as lower case | |

| dat[, outcome := count] | |

| # create case_id variable | |

| dat[, case_id := as.integer(factor(person))] | |

| # create time | |

| dat[, time_id := seq_len(.N), case_id] | |

| # create indicator for treatment | |

| dat[, treat := as.integer(phase == "B")] | |

| # create labels for each case | |

| dat[, case_label := person] | |

| dat[] | |

| ggplot(dat, aes(time_id, outcome)) + | |

| geom_line() + | |

| geom_point(aes(col = factor(treat))) + | |

| scale_color_manual(values = cb_palette) + | |

| facet_wrap(~case_label, ncol = 2) + | |

| theme_bw() + | |

| theme(legend.position = "top") | |

| ggsave("joined_tasky_01_raw.png", width = 6.5, height = 3) | |

| # set increase to 0 if improvement requires reduction | |

| dat_list <- create_dat_list(dat, increase = 1) | |

| dat_list | |

| # compile Stan model (only necessary first time) | |

| ord_ar <- cmdstanr::cmdstan_model("ordered_ssr.stan") | |

| # fit model with some default choices | |

| fit <- ord_ar$sample( | |

| data = dat_list, seed = 12345, iter_warmup = 1e3, iter_sampling = 1e3, | |

| chains = 3, parallel_chains = 3 | |

| ) | |

| # assess sampler problems | |

| fit$cmdstan_diagnose() | |

| # SD of treat-effect ("ridge" regularization), | |

| # random-effect SDs, treat-effect, | |

| # varying intercepts and treat-effect, | |

| # autocorrelation coefficient | |

| print(fit$summary(c( | |

| "sigma_te", "sigma_coefs", "treat_eff", "coefs", "ac" | |

| )), n = 50) | |

| # ordinal thresholds | |

| print(fit$summary("cutpoints"), n = 50) | |

| # effect sizes | |

| print(fit$summary(c( | |

| "mean_s", "mean_diff", "log_mean_ratio", | |

| "median_s", "median_diff", "log_median_ratio", | |

| "nap", "pem", "tau" | |

| )), n = 40) | |

| # posterior predictive checking | |

| # using ppc_bars since data are highly discrete | |

| bayesplot::ppc_bars( | |

| dat_list$y_ord, | |

| posterior::as_draws_matrix(fit$draws("ord_sim")) | |

| ) | |

| ggsave("joined_tasky_02_ppc.png", width = 6.5, height = 3) | |

| # assuming data have many levels, then use density overlays, | |

| # but not so reasonable here: | |

| bayesplot::ppc_dens_overlay( | |

| dat$outcome, | |

| posterior::as_draws_matrix(fit$draws("y_sim"))[sample(3000, 300), ] | |

| ) | |

| # predictions for each case-timepoint | |

| yhat_s <- fit$summary("y_hat") | |

| dat_merge <- cbind(dat, yhat_s[, c("median", "q5", "q95")]) | |

| setnames(dat_merge, c("median", "q5", "q95"), c("md", "lo", "hi")) | |

| dat_merge | |

| # predictions with 90% intervals | |

| # predictions are poor since we don't account for trend | |

| ggplot(dat_merge, aes(time_id, outcome)) + | |

| geom_point(aes(col = factor(treat))) + | |

| geom_line(linewidth = .125) + | |

| facet_wrap(~case_label, ncol = 2) + | |

| theme_bw() + | |

| geom_line(aes(y = md), linetype = 2) + | |

| theme(legend.position = "top") + | |

| scale_color_manual(values = cb_palette) + | |

| geom_ribbon(aes(ymin = lo, ymax = hi), alpha = .2) | |

| # posterior predictive distribution for each case-timepoint on ordinal scale | |

| ysim_s <- fit$summary("ord_sim") | |

| dat_sims <- cbind(dat, ysim_s[, c("median", "q5", "q95")]) | |

| setnames(dat_sims, c("median", "q5", "q95"), c("md", "lo", "hi")) | |

| dat_sims[] | |

| # create ranked version of outcome | |

| dat_sims[, ord_outcome := frank(outcome, ties.method = "dense")] | |

| # distribution with 90% intervals | |

| ggplot(dat_sims, aes(time_id, ord_outcome)) + | |

| geom_point(aes(col = factor(treat))) + | |

| geom_line(linewidth = .125) + | |

| facet_wrap(~case_label, ncol = 2) + | |

| theme_bw() + | |

| geom_line(aes(y = md), linetype = 2) + | |

| theme(legend.position = "top") + | |

| scale_color_manual(values = cb_palette) + | |

| geom_ribbon(aes(ymin = lo, ymax = hi), alpha = .2) + | |

| labs(y = "Ranked outcome") | |

| ggsave("joined_tasky_03_ppd_90.png", width = 6.5, height = 3) | |

| # create effect size plot | |

| # case aggregated data | |

| (gm_count <- dat[ | |

| , .N, list(case_label, case_id, x = treat + 1) | |

| ][order(case_id, x)]) | |

| tmp_gm <- gm_count[, .(x = sum(x), N = sum(N)), list(case_label, case_id)] | |

| tmp_gm[] | |

| (gmean_s <- fit$summary( | |

| c( | |

| "mean_s", "mean_diff", "log_mean_ratio", | |

| "median_s", "median_diff", "log_median_ratio", | |

| "nap", "pem", "tau" | |

| ), | |

| ~ quantile(.x, probs = c(.025, .1, .5, .9, .975)), | |

| posterior::default_convergence_measures() | |

| )[, c("2.5%", "10%", "50%", "90%", "97.5%", "rhat", "ess_bulk", "ess_tail")]) | |

| gmean_sum <- as.data.table(cbind( | |

| # row-bind: case-time counts, case-counts and repeated in order above | |

| # to column-join join with effect sizes table | |

| rbindlist( | |

| list( | |

| gm_count, tmp_gm, tmp_gm, | |

| gm_count, tmp_gm, tmp_gm, | |

| tmp_gm, tmp_gm, tmp_gm | |

| ), | |

| idcol = "metric" | |

| ), | |

| gmean_s | |

| )) | |

| gmean_sum[] # metric column contains id | |

| gmean_sum[, metric_t := c( | |

| "means", "mean diff", "log(mean ratio)", | |

| "medians", "median diff", "log(median ratio)", | |

| "NAP", "PEM", "TAU" | |

| )[metric]] | |

| # label phases | |

| gmean_sum[, x_lab := factor(c("A", "B", "B > A")[x], c("A", "B", "B > A"))] | |

| # set null conditions for effect sizes | |

| gmean_sum[, null := c(NA, 0, 0, NA, 0, 0, .5, .5, 0)[metric]] | |

| gmean_sum[, diff := grepl("means|medians", metric_t)] | |

| gmean_sum[] | |

| ggplot(gmean_sum, aes(reorder(case_label, -case_id), y = , col = x_lab)) + | |

| # Pointrange w/ 95% interval | |

| geom_pointrange( | |

| aes(ymin = , ymax = ), | |

| shape = 4, position = position_dodge(.5) | |

| ) + | |

| # Bar w/ 80% interval | |

| geom_linerange( | |

| aes(ymin = , ymax = ), | |

| alpha = .25, linewidth = 3, | |

| position = position_dodge(.5) | |

| ) + | |

| # Null conditions | |

| geom_hline(aes(yintercept = null), linetype = 2, alpha = .125) + | |

| geom_text( | |

| aes(label = number(, .01)), | |

| position = position_dodge(.5), vjust = -.7, | |

| size = 3, data = gmean_sum[diff == FALSE] | |

| ) + | |

| coord_flip() + | |

| gen_theme + | |

| scale_color_manual(values = cb_palette[c(2, 3, 1)], name = "") + | |

| facet_wrap(~ reorder(metric_t, metric), scales = "free_x", nrow = 3) | |

| ggsave("joined_tasky_04_ord_es_80_95.png", width = 6.5, height = 4.5) | |

| # compile ordinal effects based on simple descriptives | |

| frq_dt <- rbindlist(list( | |

| dat[, SingleCaseES::NAP( | |

| outcome[treat == 0], outcome[treat == 1] | |

| ), case_label], | |

| dat[, SingleCaseES::PEM( | |

| outcome[treat == 0], outcome[treat == 1] | |

| ), case_label], | |

| dat[, SingleCaseES::LRRi( | |

| outcome[treat == 0], outcome[treat == 1] | |

| ), case_label], | |

| dat[, SingleCaseES::LRM( | |

| outcome[treat == 0], outcome[treat == 1] | |

| ), case_label] | |

| ), fill = TRUE) | |

| frq_dt[] | |

| # compile ordinal model effects | |

| ord_es_dt <- rbindlist( | |

| lapply(c("nap", "pem", "log_mean_ratio", "log_median_ratio"), function(es) { | |

| extract_es( | |

| fit, es, dat[order(case_id), .N, list(case_label)]$case_label, .95 | |

| ) | |

| }) | |

| ) | |

| ord_es_dt[] | |

| joined_es_dt <- rbindlist( | |

| list(frq_dt, ord_es_dt), | |

| fill = TRUE, idcol = "approach" | |

| ) | |

| joined_es_dt[] | |

| joined_es_dt[, approach_t := factor( | |

| approach, 2:1, | |

| c("Bayesian Ordinal (hierarchical)", "Sample statistics (non-hierarchical)") | |

| )] | |

| joined_es_dt[] | |

| joined_es_dt[grepl("MEAN_RA", ES), ES := "LRRi"] | |

| joined_es_dt[grepl("MEDIAN_RA", ES), ES := "LRM"] | |

| joined_es_dt[] | |

| joined_es_dt[, .N, ES] | |

| joined_es_dt[, null := fcase( | |

| ES %in% c("NAP", "PEM"), .5, | |

| grepl("LR", ES), 0 | |

| )] | |

| pt_dodge <- position_dodge(width = .8) | |

| ggplot(joined_es_dt, aes( | |

| reorder(case_label, -as.integer(factor(case_label))), | |

| Est, | |

| ymin = CI_lower, ymax = CI_upper, group = approach_t, | |

| shape = approach_t, colour = approach_t, label = percent(Est, 1) | |

| )) + | |

| geom_text(position = pt_dodge, vjust = -.5, size = 2.5) + | |

| geom_point(position = pt_dodge) + | |

| geom_linerange(position = pt_dodge) + | |

| geom_hline(aes(yintercept = null), linetype = 2) + | |

| scale_color_manual(values = cb_palette[2:1]) + | |

| scale_shape_manual(values = c(4, 1)) + | |

| scale_y_continuous(labels = percent_format()) + | |

| facet_wrap( | |

| ~ factor( | |

| ES, c("NAP", "PEM", "LRRi", "LRM"), | |

| paste0(c("NAP", "PEM", "LRRi", "LRM"), " w/ 95% CI") | |

| ), | |

| scales = "free_x" | |

| ) + | |

| coord_flip() + | |

| guides( | |

| col = guide_legend(reverse = TRUE), shape = guide_legend(reverse = TRUE) | |

| ) + | |

| gen_theme + | |

| labs(col = "", shape = "") | |

| ggsave("joined_tasky_05_ord_es_comp_95.png", width = 6.5, height = 4) | |

| # wipe environment & free memory | |

| rm(list = ls()) | |

| gc() | |

| # schmidt-example ---- | |

| # load required functions and objects | |

| source("create_ord_stan.R") | |

| dat_full <- as.data.table(SingleCaseES::Schmidt2012) | |

| dat_full[] | |

| # required variables: | |

| # create outcome variable as lower case | |

| dat_full[, outcome := Outcome] | |

| # create case_id variable | |

| dat_full[, case_id := as.integer(factor(Case))] | |

| # create time | |

| dat_full[, time_id := Session_num, case_id] | |

| # create indicator for treatment | |

| dat_full[, treat := Trt] | |

| # create labels for each case | |

| dat_full[, case_label := Case] | |

| dat_full[] | |

| head(dat_full[Behavior == "Conversation"]) | |

| head(dat_full[Behavior == "Initiations"]) | |

| head(dat_full[Behavior == "Responses"]) | |

| dat <- dat_full[Behavior == "Conversation"] | |

| dat[] | |

| ggplot(dat, aes(time_id, outcome)) + | |

| geom_line() + | |

| geom_point(aes(col = factor(treat))) + | |

| scale_color_manual(values = cb_palette) + | |

| facet_wrap(~case_label, ncol = 2) + | |

| theme_bw() + | |

| theme(legend.position = "top") | |

| ggsave("joined_schmidt_01_raw.png", width = 6.5, height = 3) | |

| # set increase to 0 if improvement requires reduction | |

| dat_list <- create_dat_list(dat, increase = 1) | |

| dat_list | |

| # compile Stan model (only necessary first time) | |

| ord_ar <- cmdstanr::cmdstan_model("ordered_ssr.stan") | |

| # fit model with some default choices | |

| fit <- ord_ar$sample( | |

| data = dat_list, seed = 12345, iter_warmup = 1e3, iter_sampling = 1e3, | |

| chains = 3, parallel_chains = 3 | |

| ) | |

| # assess sampler problems | |

| fit$cmdstan_diagnose() | |

| # SD of treat-effect ("ridge" regularization), | |

| # random-effect SDs, treat-effect, | |

| # varying intercepts and treat-effect, | |

| # autocorrelation coefficient | |

| print(fit$summary(c( | |

| "sigma_te", "sigma_coefs", "treat_eff", "coefs", "ac" | |

| )), n = 50) | |

| # ordinal thresholds | |

| print(fit$summary("cutpoints"), n = 50) | |

| # effect sizes | |

| print(fit$summary(c( | |

| "mean_s", "mean_diff", "log_mean_ratio", | |

| "median_s", "median_diff", "log_median_ratio", | |

| "nap", "pem", "tau" | |

| )), n = 40) | |

| # posterior predictive checking | |

| bayesplot::ppc_dens_overlay( | |

| dat$outcome, | |

| posterior::as_draws_matrix(fit$draws("y_sim"))[sample(3000, 300), ] | |

| ) | |

| ggsave("joined_schmidt_02_ppc.png", width = 6.5, height = 3) | |

| # predictions for each case-timepoint | |

| yhat_s <- fit$summary("y_hat") | |

| dat_merge <- cbind(dat, yhat_s[, c("median", "q5", "q95")]) | |

| setnames(dat_merge, c("median", "q5", "q95"), c("md", "lo", "hi")) | |

| dat_merge | |

| # predictions with 90% intervals | |

| # predictions are poor since we don't account for trend | |

| ggplot(dat_merge, aes(time_id, outcome)) + | |

| geom_point(aes(col = factor(treat))) + | |

| geom_line(linewidth = .125) + | |

| facet_wrap(~case_label, ncol = 2) + | |

| theme_bw() + | |

| geom_line(aes(y = md), linetype = 2) + | |

| theme(legend.position = "top") + | |

| scale_color_manual(values = cb_palette) + | |

| geom_ribbon(aes(ymin = lo, ymax = hi), alpha = .2) | |

| # posterior predictive distribution for each case-timepoint on ordinal scale | |

| ysim_s <- fit$summary("ord_sim") | |

| dat_sims <- cbind(dat, ysim_s[, c("median", "q5", "q95")]) | |

| setnames(dat_sims, c("median", "q5", "q95"), c("md", "lo", "hi")) | |

| dat_sims[] | |

| # create ranked version of outcome | |

| dat_sims[, ord_outcome := frank(outcome, ties.method = "dense")] | |

| # distribution with 90% intervals | |

| ggplot(dat_sims, aes(time_id, ord_outcome)) + | |

| geom_point(aes(col = factor(treat))) + | |

| geom_line(linewidth = .125) + | |

| facet_wrap(~case_label, ncol = 2) + | |

| theme_bw() + | |

| geom_line(aes(y = md), linetype = 2) + | |

| theme(legend.position = "top") + | |

| scale_color_manual(values = cb_palette) + | |

| geom_ribbon(aes(ymin = lo, ymax = hi), alpha = .2) + | |

| labs(y = "Ranked outcome") | |

| ggsave("joined_schmidt_03_ppd_90.png", width = 6.5, height = 3) | |

| # create effect size plot | |

| # case aggregated data | |

| (gm_count <- dat[ | |

| , .N, list(case_label, case_id, x = treat + 1) | |

| ][order(case_id, x)]) | |

| tmp_gm <- gm_count[, .(x = sum(x), N = sum(N)), list(case_label, case_id)] | |

| tmp_gm[] | |

| (gmean_s <- fit$summary( | |

| c( | |

| "mean_s", "mean_diff", | |

| "median_s", "median_diff", | |

| "nap", "pem", "tau" | |

| ), | |

| ~ quantile(.x, probs = c(.025, .1, .5, .9, .975)), | |

| posterior::default_convergence_measures() | |

| )[, c("2.5%", "10%", "50%", "90%", "97.5%", "rhat", "ess_bulk", "ess_tail")]) | |

| gmean_sum <- as.data.table(cbind( | |

| # row-bind: case-time counts, case-counts and repeated in order above | |

| # to column-join join with effect sizes table | |

| rbindlist( | |

| list( | |

| gm_count, tmp_gm, | |

| gm_count, tmp_gm, | |

| tmp_gm, tmp_gm, tmp_gm | |

| ), | |

| idcol = "metric" | |

| ), | |

| gmean_s | |

| )) | |

| gmean_sum[] # metric column contains id | |

| gmean_sum[, metric_t := c( | |

| "means", "mean diff", | |

| "medians", "median diff", | |

| "NAP", "PEM", "TAU" | |

| )[metric]] | |

| # label phases | |

| gmean_sum[, x_lab := factor(c("A", "B", "B > A")[x], c("A", "B", "B > A"))] | |

| # set null conditions for effect sizes | |

| gmean_sum[, null := c(NA, 0, NA, 0, .5, .5, 0)[metric]] | |

| gmean_sum[, diff := grepl("means|medians", metric_t)] | |

| gmean_sum[] | |

| ggplot(gmean_sum, aes(reorder(case_label, -case_id), y = , col = x_lab)) + | |

| # Pointrange w/ 95% interval | |

| geom_pointrange( | |

| aes(ymin = , ymax = ), | |

| shape = 4, position = position_dodge(.5) | |

| ) + | |

| # Bar w/ 80% interval | |

| geom_linerange( | |

| aes(ymin = , ymax = ), | |

| alpha = .25, linewidth = 3, | |

| position = position_dodge(.5) | |

| ) + | |

| # Null conditions | |

| geom_hline(aes(yintercept = null), linetype = 2, alpha = .125) + | |

| geom_text( | |

| aes(label = number(, .01)), | |

| position = position_dodge(.5), vjust = -.7, | |

| size = 3, data = gmean_sum[diff == FALSE] | |

| ) + | |

| coord_flip() + | |

| gen_theme + | |

| scale_color_manual(values = cb_palette[c(2, 3, 1)], name = "") + | |

| facet_wrap(~ reorder(metric_t, metric), scales = "free_x", nrow = 3) | |

| ggsave("joined_schmidt_04_ord_es_80_95.png", width = 6.5, height = 4.5) | |

| # compile ordinal effects based on simple descriptives | |

| frq_dt <- rbindlist(list( | |

| dat[, SingleCaseES::NAP( | |

| outcome[treat == 0], outcome[treat == 1] | |

| ), case_label], | |

| dat[, SingleCaseES::PEM( | |

| outcome[treat == 0], outcome[treat == 1] | |

| ), case_label] | |

| ), fill = TRUE) | |

| frq_dt[] | |

| # compile ordinal model effects | |

| ord_es_dt <- rbindlist( | |

| lapply(c("nap", "pem"), function(es) { | |

| extract_es( | |

| fit, es, dat[order(case_id), .N, list(case_label)]$case_label, .95 | |

| ) | |

| }) | |

| ) | |

| ord_es_dt[] | |

| joined_es_dt <- rbindlist( | |

| list(frq_dt, ord_es_dt), | |

| fill = TRUE, idcol = "approach" | |

| ) | |

| joined_es_dt[] | |

| joined_es_dt[, approach_t := factor( | |

| approach, 2:1, | |

| c("Bayesian Ordinal (hierarchical)", "Sample statistics (non-hierarchical)") | |

| )] | |

| joined_es_dt[] | |

| joined_es_dt[grepl("MEAN_RA", ES), ES := "LRRi"] | |

| joined_es_dt[grepl("MEDIAN_RA", ES), ES := "LRM"] | |

| joined_es_dt[] | |

| joined_es_dt[, .N, ES] | |

| joined_es_dt[, null := fcase( | |

| ES %in% c("NAP", "PEM"), .5, | |

| grepl("LR", ES), 0 | |

| )] | |

| pt_dodge <- position_dodge(width = .8) | |

| ggplot(joined_es_dt, aes( | |

| reorder(case_label, -as.integer(factor(case_label))), | |

| Est, | |

| ymin = CI_lower, ymax = CI_upper, group = approach_t, | |

| shape = approach_t, colour = approach_t, label = percent(Est, 1) | |

| )) + | |

| geom_text(position = pt_dodge, vjust = -.5, size = 2.5) + | |

| geom_point(position = pt_dodge) + | |

| geom_linerange(position = pt_dodge) + | |

| geom_hline(aes(yintercept = null), linetype = 2) + | |

| scale_color_manual(values = cb_palette[2:1]) + | |

| scale_shape_manual(values = c(4, 1)) + | |

| scale_y_continuous(labels = percent_format()) + | |

| facet_wrap( | |

| ~ factor( | |

| ES, c("NAP", "PEM", "LRRi", "LRM"), | |

| paste0(c("NAP", "PEM", "LRRi", "LRM"), " w/ 95% CI") | |

| ), | |

| scales = "free_x" | |

| ) + | |

| coord_flip() + | |

| guides( | |

| col = guide_legend(reverse = TRUE), shape = guide_legend(reverse = TRUE) | |

| ) + | |

| gen_theme + | |

| labs(col = "", shape = "") | |

| ggsave("joined_schmidt_05_ord_es_comp_95.png", width = 6.5, height = 2.5) | |

| # wipe environment & free memory | |

| rm(list = ls()) | |

| gc() |

| cb_palette <- c( | |

| "#999999", "#E69F00", "#56B4E9", "#009E73", "#F0E442", "#0072B2", | |

| "#D55E00", "#CC79A7" | |

| ) | |

| extract_es <- function(fit, es, case_labels, confidence = .95) { | |

| stopifnot(length(es) == 1) | |

| stopifnot(es %in% c( | |

| "nap", "mean_diff", "median_diff", "log_mean_ratio", | |

| "log_median_ratio", "nap", "tau", "pem" | |

| )) | |

| lo <- (1 - confidence) / 2 | |

| ret <- data.table::as.data.table(fit$summary( | |

| es, | |

| ~ quantile(.x, c(.5, lo, 1 - lo)), sd, | |

| posterior::default_convergence_measures() | |

| )) | |

| ret$case_label <- case_labels | |

| ret$ES <- toupper(es) | |

| data.table::setnames( | |

| ret, c("50%", "sd", paste0(100 * c(lo, 1 - lo), "%")), | |

| c("Est", "SE", "CI_lower", "CI_upper") | |

| ) | |

| ret <- ret[, c( | |

| "case_label", "ES", "Est", "SE", "CI_lower", "CI_upper", "rhat", "ess_bulk" | |

| )] | |

| return(ret) | |

| } | |

| gen_theme <- theme_bw() + | |

| theme( | |

| legend.position = "top", strip.background = element_blank(), | |

| axis.title = element_blank(), panel.border = element_blank(), | |

| panel.spacing.x = unit(.5, "cm"), panel.grid.major.y = element_blank(), | |

| axis.ticks.y = element_blank(), axis.line.x = element_line(linewidth = .25) | |

| ) | |

| create_dat_list <- function(dt, increase = 1) { | |

| dl <- list( | |

| N = nrow(dt), # number of rows of data | |

| n_case = max(dt$case_id), # number of cases | |

| n_time = max(dt$time_id), # maximum number of timepoints | |

| case_id = dt$case_id, # case ID variable (integers) | |

| time_id = dt$time_id, # time variable (can be continuous) | |

| y_s = unique(sort(dt$outcome)), # unique data points in outcome | |

| y_ord = as.integer(ordered(dt$outcome)), # outcome transformed to ranks | |

| treat = dt$treat # treatment phase indicator | |

| ) | |

| # count of each unique values | |

| dl$count_levs <- as.integer(table(dl$y_ord)) | |

| # number of unique values | |

| dl$n_ord <- length(dl$count_levs) | |

| (gm_count <- dt[ | |

| , .N, list(case_label, case_id, x = treat + 1) | |

| ][order(case_id, x)]) | |

| dl$Ncount <- nrow(gm_count) # number of case-phases | |

| dl$count <- gm_count$N # number of data points computed in gm_count | |

| dl$increase <- increase # set to 0 if improvement requires reduction | |

| return(dl) | |

| } |

| // auto-generated ordinal regression with auto-correlation | |

| functions { | |

| matrix create_autoreg_mat (real corr, int time) { | |

| matrix[time, time] autoreg_mat = identity_matrix(time); | |

| for (i in 2:time) { | |

| for (j in 1:(i - 1)) { | |

| autoreg_mat[i, j] = pow(corr, abs(i - j)); | |

| autoreg_mat[j, i] = autoreg_mat[i, j]; | |

| } | |

| } | |

| return(autoreg_mat); | |

| } | |

| } | |

| data { | |

| int N; | |

| int n_case; | |

| int n_time; | |

| int<lower = 2> n_ord; | |

| array[N] int<lower = 1, upper = n_case> case_id; | |

| array[N] int<lower = 1, upper = n_ord> y_ord; | |

| array[N] int<lower = 1, upper = n_time> time_id; | |

| array[N] int treat; | |

| array[n_ord] int count_levs; | |

| vector[n_ord] y_s; | |

| int Ncount; | |

| vector[Ncount] count; | |

| int<lower = 0, upper = 1> increase; | |

| } | |

| transformed data { | |

| array[n_case, n_time] int outcome_ord = rep_array(0, n_case, n_time); | |

| array[n_case, n_time] int treat_arr = rep_array(0, n_case, n_time); | |

| array[n_case, n_time] int idx_01 = rep_array(0, n_case, n_time); | |

| for (i in 1:N) { | |

| outcome_ord[case_id[i], time_id[i]] = y_ord[i]; | |

| treat_arr[case_id[i], time_id[i]] = treat[i]; | |

| } | |

| for (i in 1:n_case) { | |

| for (j in 1:n_time) { | |

| if (outcome_ord[i, j] >= 1 && outcome_ord[i, j] <= n_ord) { | |

| idx_01[i, j] = 1; | |

| } | |

| } | |

| } | |

| array[n_case] int nm_count; | |

| for (i in 1:n_case) { | |

| nm_count[i] = sum(idx_01[i, ]); | |

| } | |

| array[n_case, n_time] int true_idxs = rep_array(0, n_case, n_time); | |

| for (i in 1:n_case) { | |

| int row_idx = 0; | |

| for (j in 1:n_time) { | |

| if (idx_01[i, j] == 1) { | |

| row_idx += 1; | |

| true_idxs[i, row_idx] = j; | |

| } | |

| } | |

| } | |

| int n_ord_gt_2 = n_ord > 2 ? 1 : 0; | |

| int count_others = n_ord > 2 ? sum(count_levs[2:(n_ord - 1)]) : 0; | |

| } | |

| parameters { | |

| real<lower = 0> sigma_te; | |

| vector<lower = 0>[2] sigma_coefs; | |

| real treat_eff; | |

| matrix[n_case, 2] coefs_base; | |

| real<lower = 0, upper = 1> phi_01; | |

| ordered[n_ord - 1] cutpoints; | |

| vector<upper = cutpoints[1]>[count_levs[1]] z_first; | |

| vector<lower = cutpoints[n_ord - 1]>[count_levs[n_ord]] z_last; | |

| vector<lower = 0.0, upper = 1.0>[count_others] z_inter; | |

| } | |

| model { | |

| cutpoints ~ student_t(3, 0, 1); | |

| sigma_te ~ student_t(3, 0, 1); | |

| treat_eff ~ normal(0, sigma_te); | |

| phi_01 ~ beta(5, 5); | |

| sigma_coefs ~ student_t(3, 0, 1); | |

| to_vector(coefs_base) ~ std_normal(); | |

| { | |

| matrix[n_case, n_time] mu; | |

| matrix[n_time, n_time] autoreg_mat = create_autoreg_mat( | |

| phi_01 * 2 - 1.0, n_time | |

| ); | |

| matrix[n_case, 2] coefs; | |

| matrix[n_case, n_time] z; | |

| array[2 + n_ord_gt_2] int pos_levs = rep_array(0, 2 + n_ord_gt_2); | |

| coefs[, 1] = sigma_coefs[1] * coefs_base[, 1]; | |

| coefs[, 2] = treat_eff + sigma_coefs[2] * coefs_base[, 2]; | |

| for (i in 1:n_case) { | |

| array[nm_count[i]] int row_idxs = true_idxs[i, 1:nm_count[i]]; | |

| mu[i, ] = coefs[i, 1] + coefs[i, 2] * to_row_vector(treat_arr[i, ]); | |

| for (j in 1:n_time) { | |

| int curr_idx = outcome_ord[i, j]; | |

| if (curr_idx == 1) { | |

| pos_levs[1] += 1; | |

| z[i, j] = z_first[pos_levs[1]]; | |

| } else if (curr_idx > 0) { | |

| if (curr_idx == n_ord) { | |

| pos_levs[2] += 1; | |

| z[i, j] = z_last[pos_levs[2]]; | |

| } else { | |

| real b_min_a = cutpoints[curr_idx] - cutpoints[curr_idx - 1]; | |

| // rescale 0-1 to cutpoints scale | |

| pos_levs[3] += 1; | |

| z[i, j] = b_min_a * z_inter[pos_levs[3]] + cutpoints[curr_idx - 1]; | |

| // jacobian adjustment | |

| target += log(abs(b_min_a)); | |

| } | |

| } | |

| } | |

| z[i, row_idxs] ~ multi_normal_cholesky( | |

| mu[i, row_idxs], | |

| cholesky_decompose(autoreg_mat[row_idxs, row_idxs]) | |

| ); | |

| } | |

| } | |

| } | |

| generated quantities { | |

| real ac = phi_01 * 2 - 1.0; | |

| matrix[n_case, 2] coefs; | |

| vector[n_case * 2] mean_s; | |

| vector[n_case * 2] median_s; | |

| vector[n_case] mean_diff; | |

| vector[n_case] median_diff; | |

| vector[n_case] log_mean_ratio; | |

| vector[n_case] log_median_ratio; | |

| vector[n_case] nap; | |

| vector[n_case] tau; | |

| vector[n_case] pem; | |

| vector[N] y_hat; | |

| vector[N] y_sim; | |

| vector[N] log_lik; | |

| array[N] int ord_sim; | |

| coefs[, 1] = sigma_coefs[1] * coefs_base[, 1]; | |

| coefs[, 2] = treat_eff + sigma_coefs[2] * coefs_base[, 2]; | |

| { | |

| matrix[n_case, n_time] mu; | |

| matrix[n_time, n_time] autoreg_mat = create_autoreg_mat( | |

| phi_01 * 2 - 1.0, n_time | |

| ); | |

| matrix[n_ord, n_case * 2] pmf_mat = rep_matrix(0.0, n_ord, n_case * 2); | |

| vector[n_ord] pmf_vec; | |

| vector[n_ord - 1] p_prob; | |

| int mat_col_id; | |

| int col_id; | |

| vector[n_ord] cdf = rep_vector(0.0, n_ord); | |

| vector[n_ord] d0_vec; | |

| vector[n_ord] d1_vec; | |

| vector[n_ord] ccd1_vec; | |

| for (i in 1:N) { | |

| mat_col_id = (case_id[i] - 1) * 2 + treat[i] + 1; | |

| mu[case_id[i], time_id[i]] = | |

| coefs[case_id[i], 1] + coefs[case_id[i], 2] * treat[i]; | |

| p_prob = Phi(cutpoints - mu[case_id[i], time_id[i]]); | |

| for (j in 1:(n_ord - 1)) { | |

| if (j == 1) { | |

| pmf_vec[j] = p_prob[j]; | |

| } else { | |

| pmf_vec[j] = p_prob[j] - p_prob[j - 1]; | |

| } | |

| } | |

| pmf_vec[n_ord] = 1 - p_prob[n_ord - 1]; | |

| y_hat[i] = sum(pmf_vec .* y_s); | |

| ord_sim[i] = ordered_probit_rng(mu[case_id[i], time_id[i]], cutpoints); | |

| log_lik[i] = ordered_probit_lpmf( | |

| y_ord[i] | mu[case_id[i], time_id[i]], cutpoints | |

| ); | |

| y_sim[i] = y_s[ord_sim[i]]; | |

| pmf_mat[, mat_col_id] = pmf_mat[, mat_col_id] + pmf_vec; | |

| } | |

| for (i in 1:n_case) { | |

| real med_a = 0; | |

| real med_b = 0; | |

| for (j in 1:2) { | |

| col_id = (i - 1) * 2 + j; | |

| pmf_mat[, col_id] = pmf_mat[, col_id] / count[col_id]; | |

| mean_s[col_id] = sum(pmf_mat[, col_id] .* y_s); | |

| cdf = cumulative_sum(pmf_mat[, col_id]); | |

| if (cdf[1] >= .5) { | |

| median_s[col_id] = y_s[1]; | |

| } else { | |

| for (k in 2:n_ord) { | |

| if (cdf[k] == .5) { | |

| median_s[col_id] = y_s[k]; | |

| } else if (cdf[k - 1] < .5 && cdf[k] > .5) { | |

| median_s[col_id] = y_s[k - 1] + (.5 - cdf[k - 1]) * | |

| (y_s[k] - y_s[k - 1]) / (cdf[k] - cdf[k - 1]); | |

| } | |

| } | |

| } | |

| } | |

| mean_diff[i] = mean_s[col_id] - mean_s[col_id - 1]; | |

| median_diff[i] = median_s[col_id] - median_s[col_id - 1]; | |

| log_mean_ratio[i] = log(mean_s[col_id]) - log(mean_s[col_id - 1]); | |

| log_median_ratio[i] = log(median_s[col_id]) - log(median_s[col_id - 1]); | |

| d0_vec = pmf_mat[, col_id - 1]; | |

| d1_vec = pmf_mat[, col_id]; | |

| ccd1_vec = 1.0 - cumulative_sum(d1_vec); | |

| nap[i] = sum(d0_vec .* ccd1_vec + .5 * d0_vec .* d1_vec); | |

| tau[i] = nap[i] * 2.0 - 1.0; | |

| for (k in 1:n_ord) { | |

| if (median_s[col_id - 1] == y_s[k]) { | |

| med_a = .5 * pmf_mat[k, col_id]; | |

| } | |

| if (median_s[col_id - 1] < y_s[k]) { | |

| med_b += pmf_mat[k, col_id]; | |

| } | |

| } | |

| pem[i] = med_a + med_b; | |

| } | |

| } | |

| if (increase == 0) { | |

| mean_diff = -mean_diff; | |

| median_diff = -median_diff; | |

| log_mean_ratio = -log_mean_ratio; | |

| log_median_ratio = -log_median_ratio; | |

| nap = 1.0 - nap; | |

| tau = -tau; | |

| pem = 1.0 - pem; | |

| } | |

| } |

| person | phase | count | |

|---|---|---|---|

| rebeccah | A | 4 | |

| rebeccah | A | 2 | |

| rebeccah | A | 2 | |

| rebeccah | A | 0 | |

| rebeccah | A | 2 | |

| rebeccah | A | 0 | |

| rebeccah | B | 6 | |

| rebeccah | B | 4 | |

| rebeccah | B | 6 | |

| rebeccah | B | 6 | |

| rebeccah | B | 6 | |

| rebeccah | A_p | 1 | |

| rebeccah | A_p | 4 | |

| rebeccah | A_p | 5 | |

| rebeccah | A_p | 0 | |

| rebeccah | A_p | 0 | |

| rebeccah | B | 6 | |

| rebeccah | B | 6 | |

| rebeccah | B | 6 | |

| rebeccah | B | 6 | |

| rebeccah | B | 6 | |

| cara | A | 2 | |

| cara | A | 6 | |

| cara | A | 4 | |

| cara | A | 2 | |

| cara | A | 1 | |

| cara | A | 5 | |

| cara | B | 0 | |

| cara | B | 6 | |

| cara | B | 2 | |

| cara | B | 3 | |

| cara | B | 6 | |

| cara | B | 4 | |

| cara | B | 4 | |

| cara | A_p | 0 | |

| cara | A_p | 6 | |

| cara | A_p | 1 | |

| cara | A_p | 1 | |

| cara | A_p | 2 | |

| cara | A_p | 0 | |

| cara | B | 5 | |

| cara | B | 4 | |

| cara | B | 5 | |

| cara | B | 4 | |

| cara | B | 5 | |

| amber | A | 6 | |

| amber | A | 6 | |

| amber | A | 6 | |

| amber | A | 5 | |

| amber | A | 0 | |

| amber | A | 6 | |

| amber | A | 0 | |

| amber | A | 0 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | A_p | 3 | |

| amber | A_p | 4 | |

| amber | A_p | 4 | |

| amber | A_p | 2 | |

| amber | A_p | 0 | |

| amber | A_p | 0 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | B | 6 | |

| amber | B | 6 |

Demonstrations

Tasky (2008)9

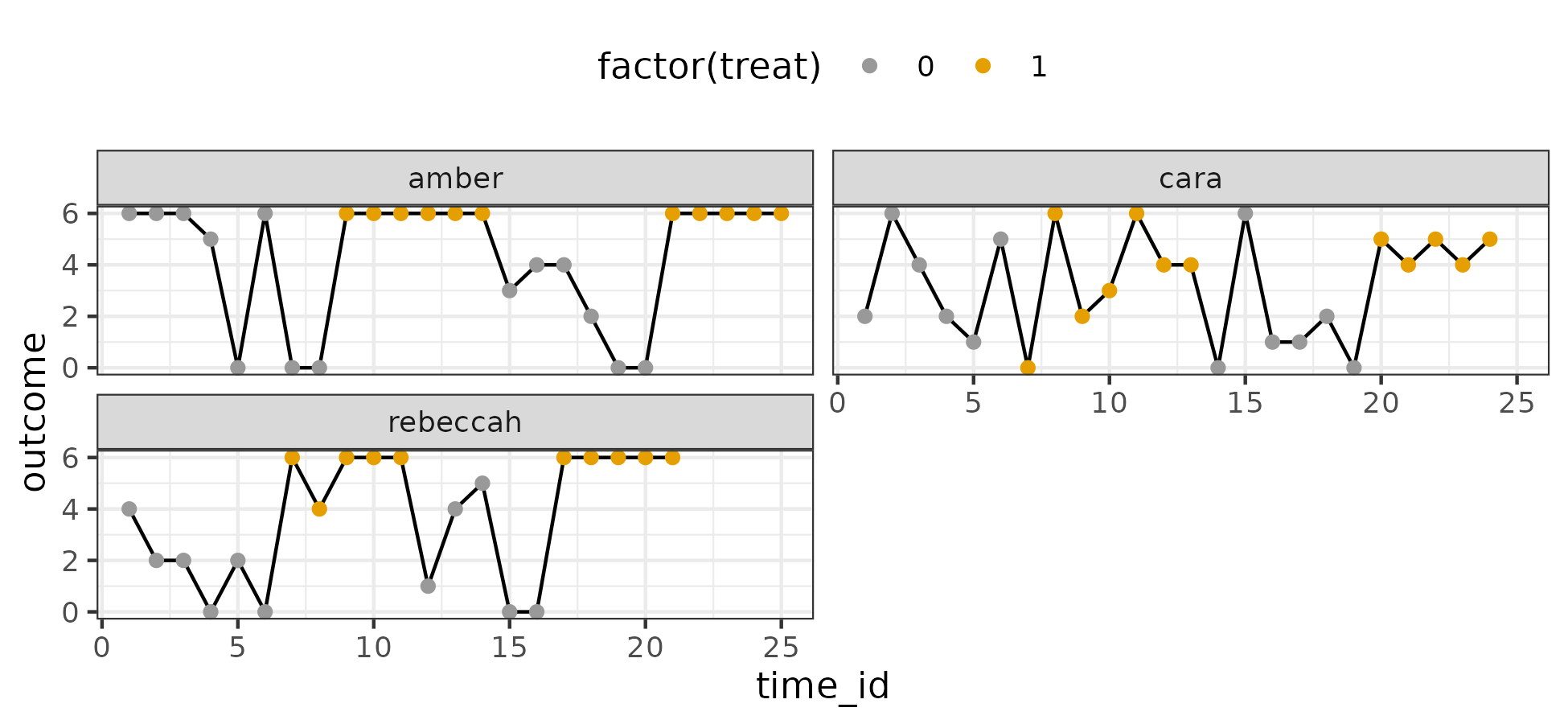

I visualize the data below:

There are three cases. The outcome is a count variable with 7 unique values – easy to assume an ordinal model. Higher values are desirable.

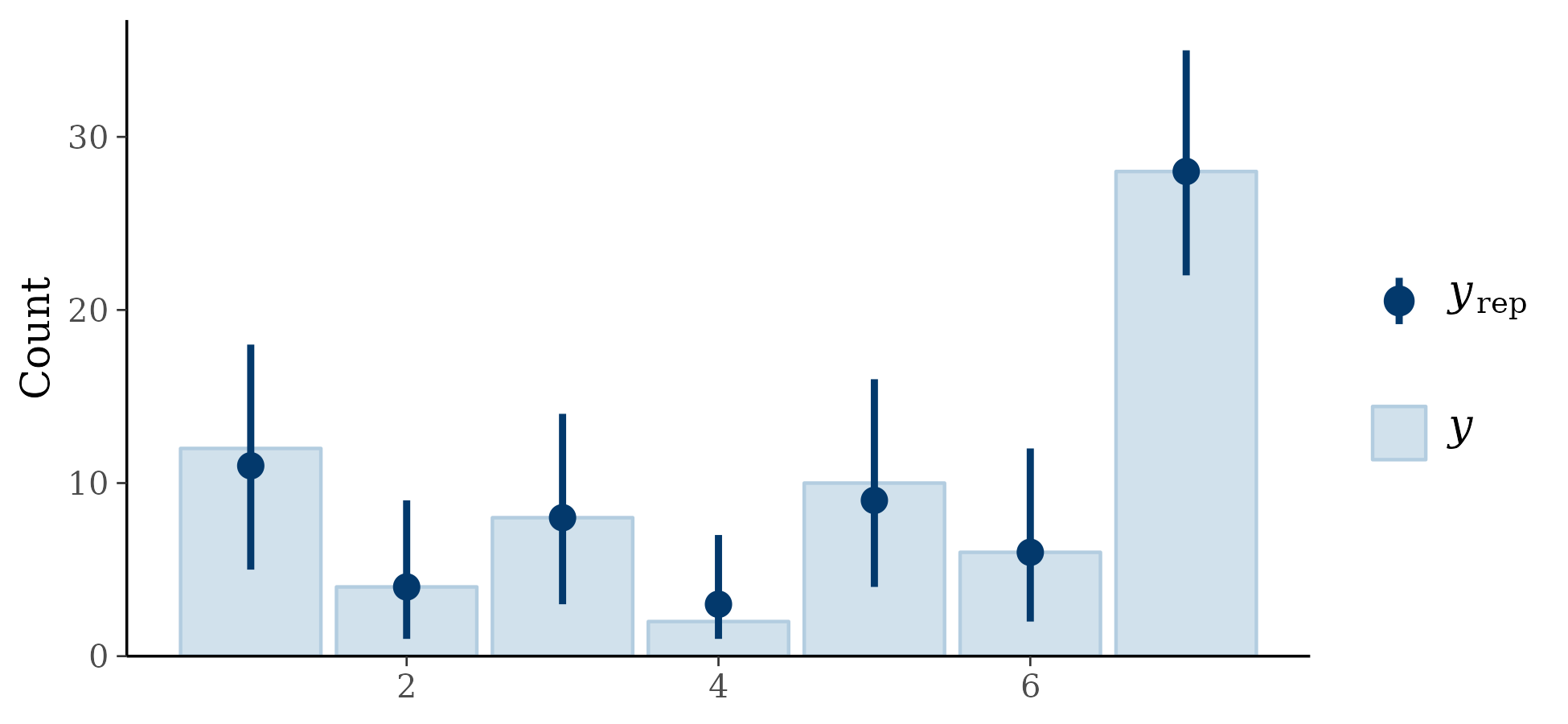

I fit the model, parameters appeared to converge without significant problems. For posterior predictive checking, I assessed the proportion for each count (95% intervals):

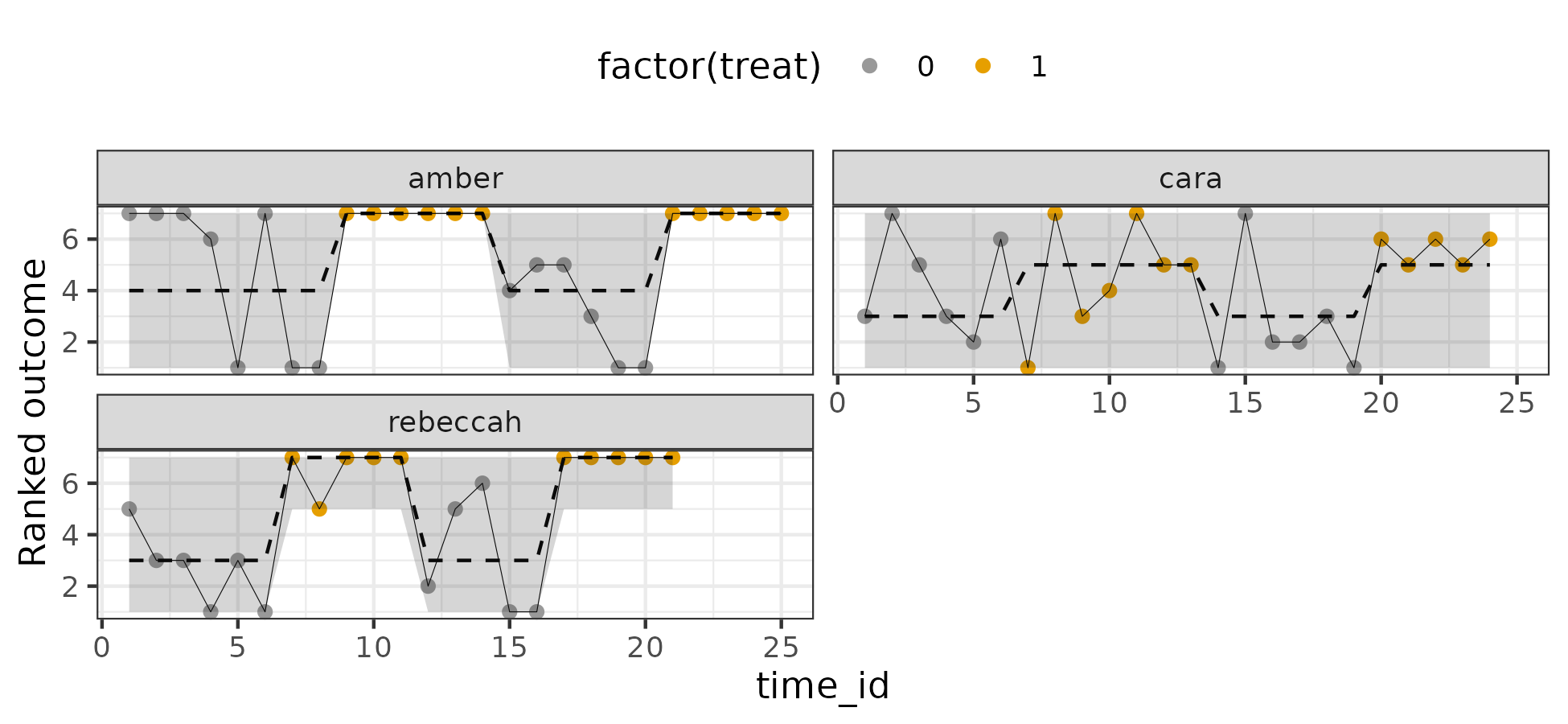

Results look acceptable. I also plotted the posterior predictive distribution against the individual trajectories on the ordinal scale (90% intervals):

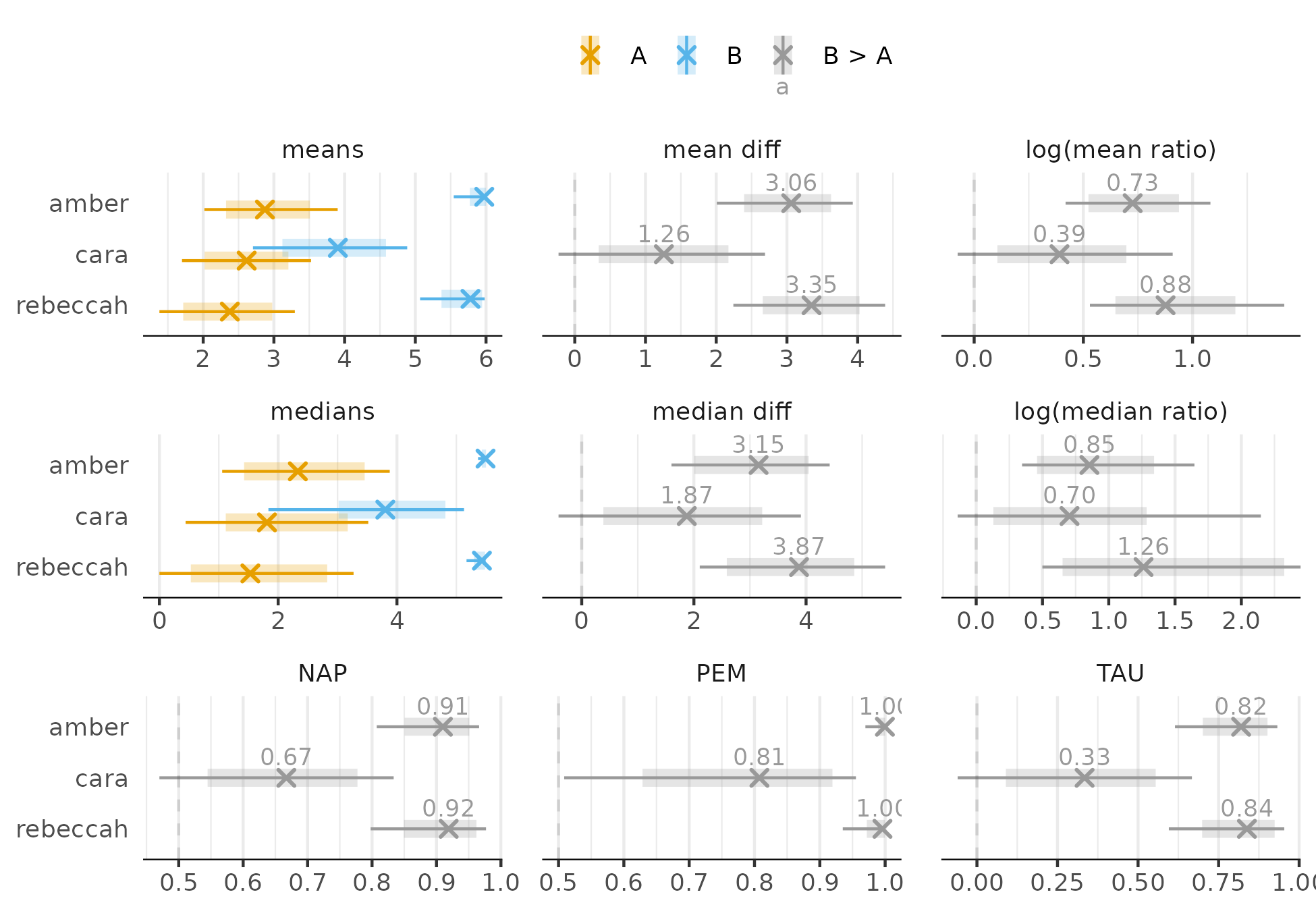

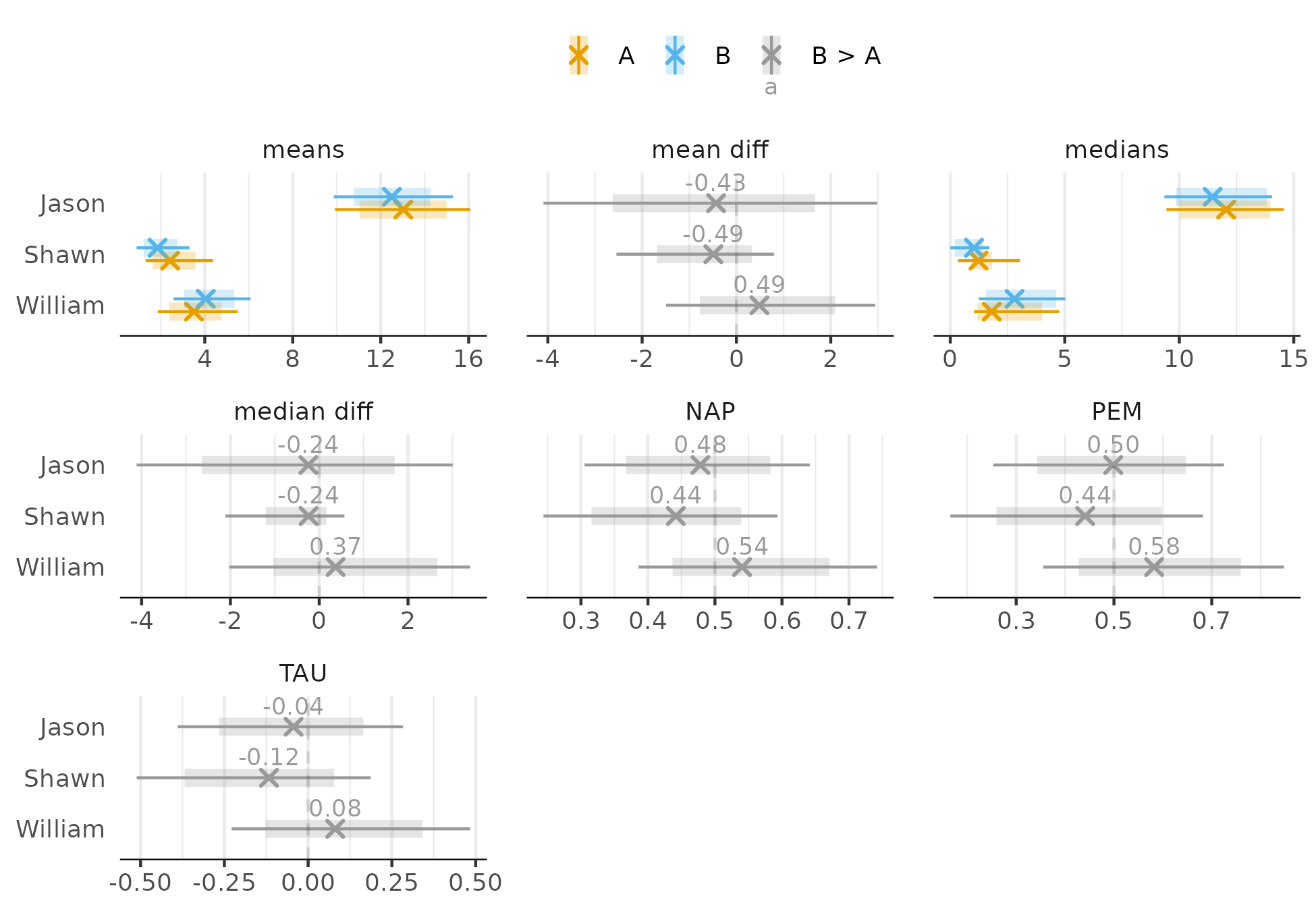

Also looks reasonable. Now for effects (intervals are 80% and 95%):

Regardless of the effect size, there is improvement for Amber and Rebeccah but the evidence is not as conclusive for Cara. Only the PEM provides clear evidence of improvement for Cara, but the uncertainty about the 81% estimate is still large.

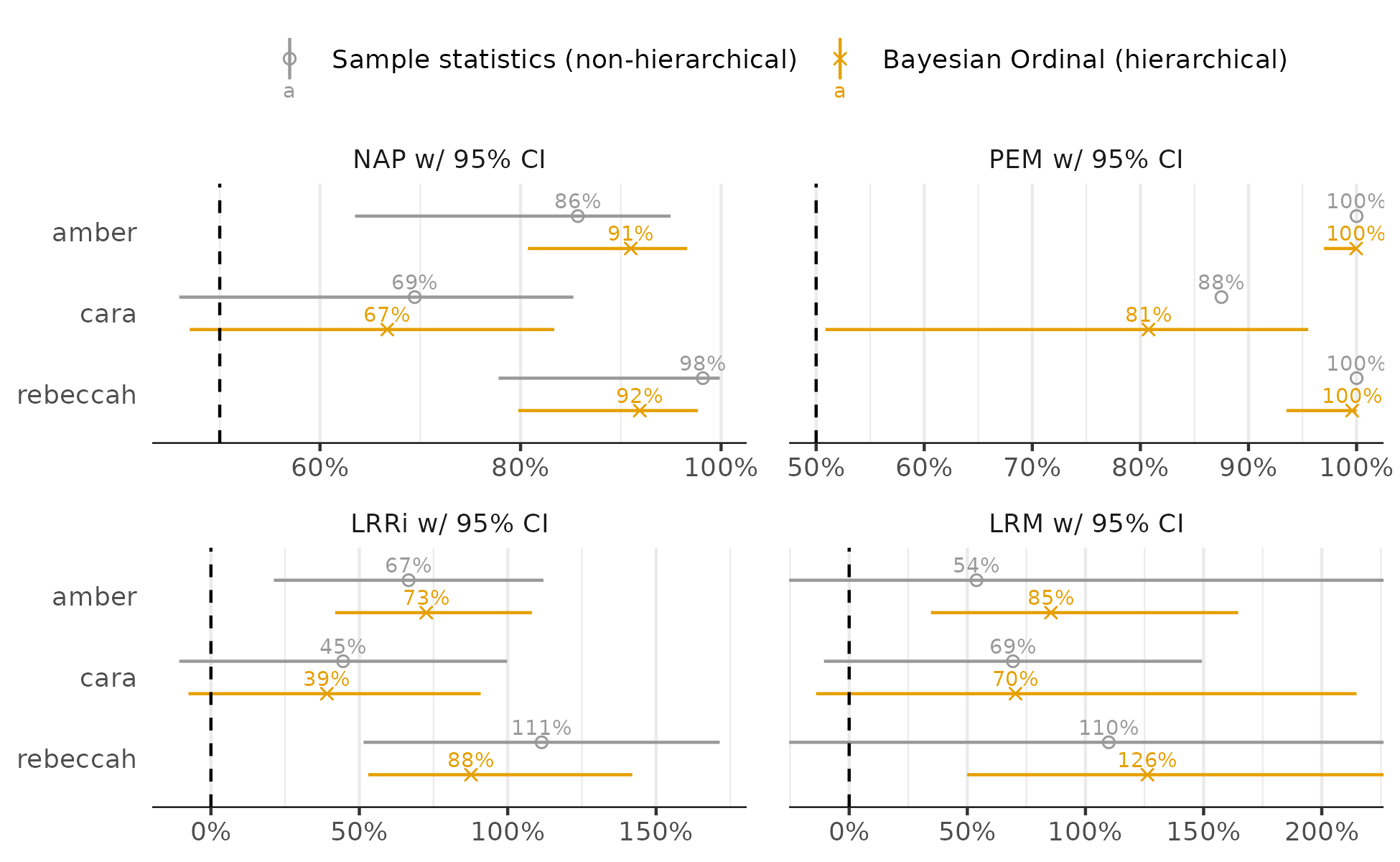

Then I compare the ordinal model effects to simple case-wise computations obtained from the SingleCaseES package (95% intervals):

The ordinal model-based effects often have less uncertainty and are often shrunken – effects of pooling and regularization. This makes the most difference for the log-ratio of medians estimates (LRM) where the improved conclusions for Amber and Rebeccah are more certain in the ordinal analysis. Note that LRRi is the log-ratio of means. Also, the sampling distribution of the PEM is not yet known, the ordinal model helps with that.

Schmidt (2012) from SingleCaseES package

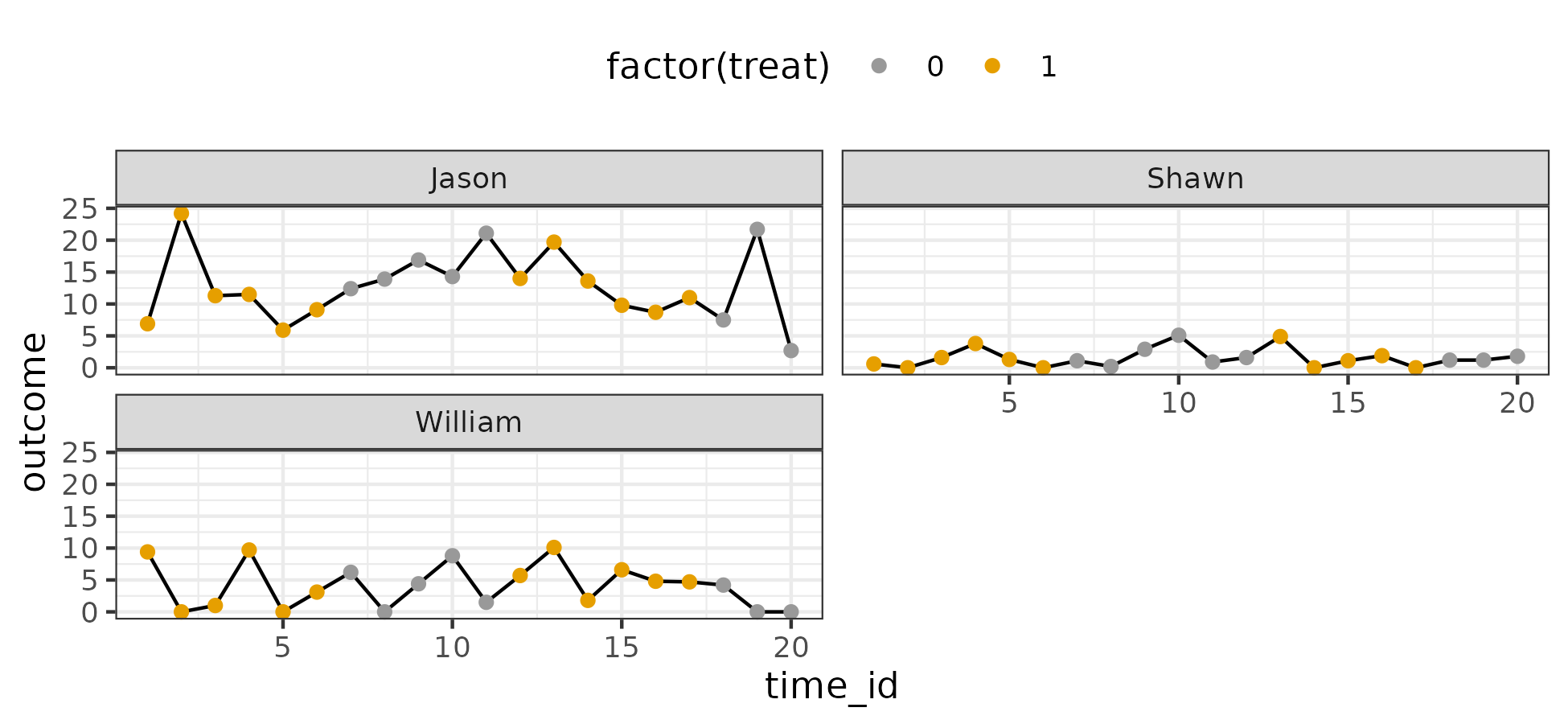

I visualize the data below:

There are three cases. The outcome is seemingly continuous and has 48 unique values. Again, higher values are desirable.

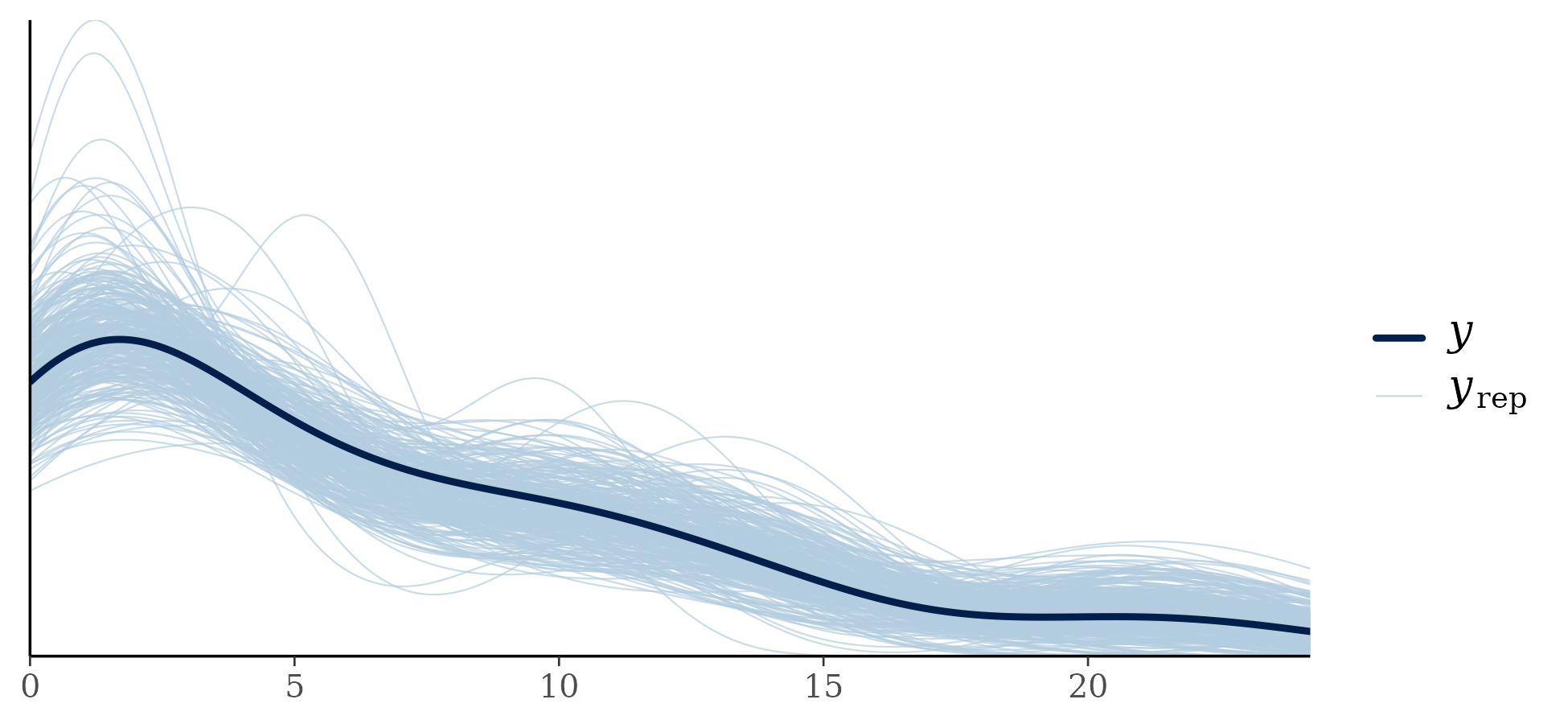

I fit the model, parameters appeared to converge without significant problems. For posterior predictive checking, I compared the density of the observed data to the density of draws from the posterior predictive distribution:

These results are on the original data scale, though they could be examined on the ordinal scale. For the original data scale, I replace the ranks in the posterior predictive distribution with the original values that were rank transformed. Results look acceptable – an advantage of the ordinal approach is that pretty much any distributional shape is realizable.

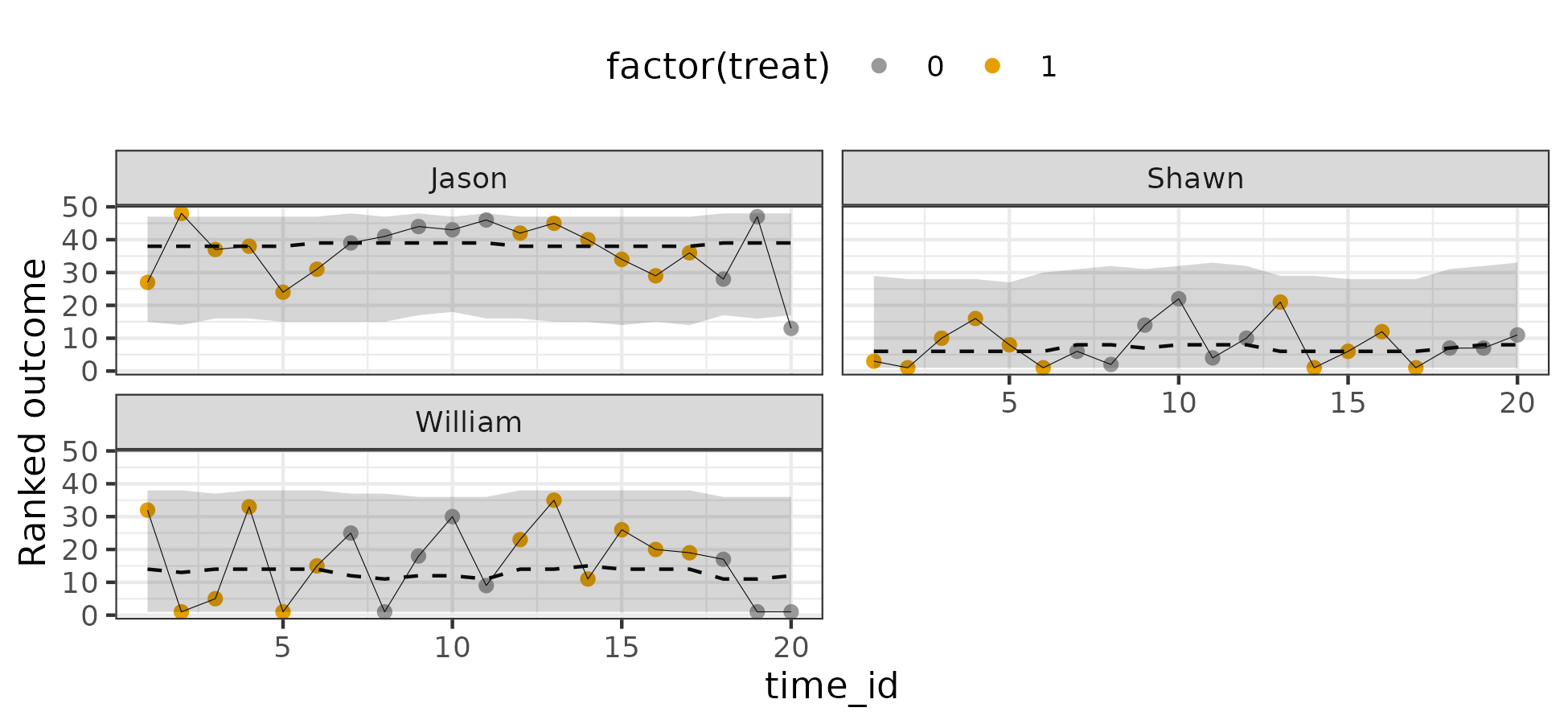

I also plotted the posterior predictive distribution against the individual trajectories on the ordinal scale (90% intervals):

We see a fairly flat pattern. This suggests the treatment hardly made a difference.

Now for effects (intervals are 80% and 95%). I exclude mean and median ratios – I think these are usually count related metrics:

None of the effects provide strong evidence for improvement.

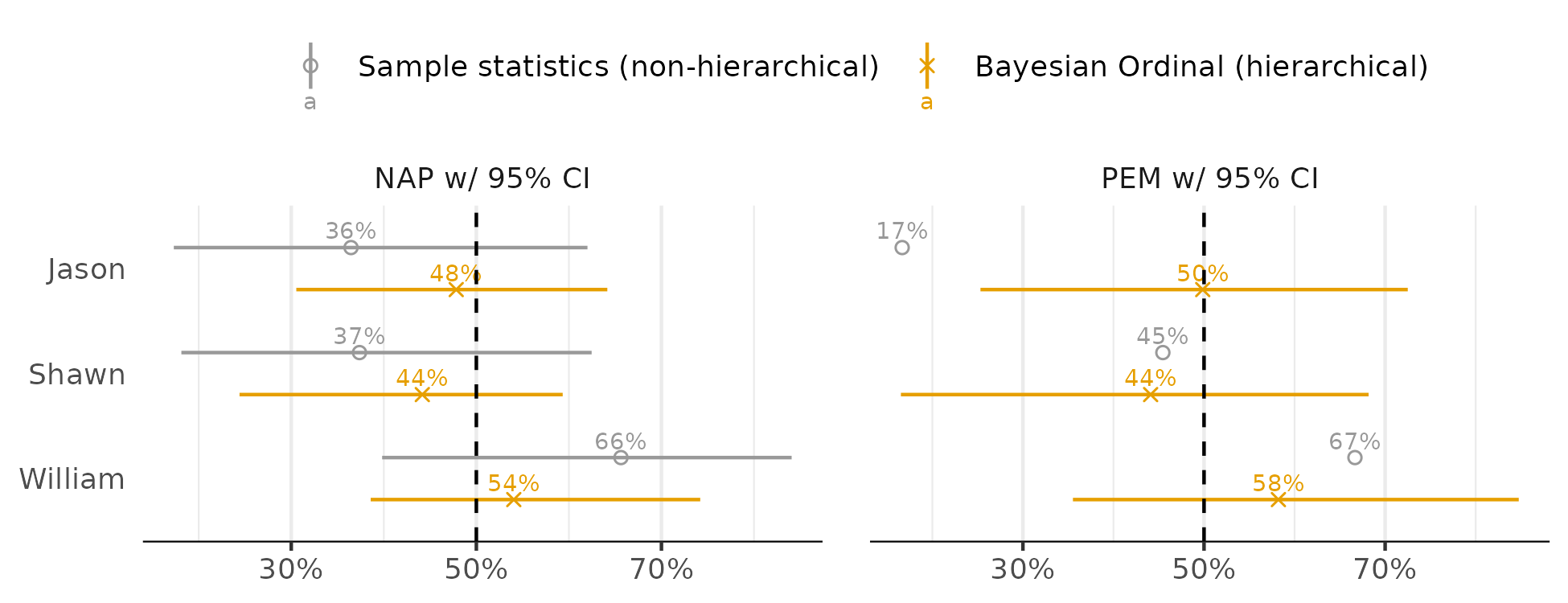

Then I compare the ordinal model effects to the standard approach (95% intervals):

We again see more stable coefficients for the ordinal approach. The most notable difference between approaches is Jason’s PEM. The standard approach returns 17% while the ordinal approach returns 50%. If you check the raw data, we can see that most of Jason’s intervention points are lower than the median of the control values. However, we would also be unlikely to conclude the intervention made things worse for Jason – the pattern looks like much fluctuation, no meaningful change. And the 17% PEM seems misleading about the overall patterns in the data.

In closing

I think ordinal regression can be pretty useful for analyzing SCD data. It’s no coincidence that several of the effect sizes used to support visual analysis of trajectories are ordinal effect sizes. The gist above contains the code to create all these plots and other plots I did not mention here. I tried to comment the scripts well enough, so I hope the gist is helpful.

Some notes about the model

- I assumed no correlation between the random intercept and random treatment effect – no strong thoughts about this but I noticed with some examples that the posterior for this parameter often just matched the prior and I didn’t want to think about it much.

- The priors are stand-in defaults, they require more thought than I’ve put into them at this stage.

- In limited trials, model runtime depends on the number of thresholds (in addition to the amount of data which is not usually much for SCD). But when using a spline to approximate the thresholds, the number of parameters can be held constant. See this gist for some examples of using splines to approximate the thresholds, though I do not account for autocorrelation in these models. Instead, I have case-specific trajectories.

- Trying to capture autocorrelation on the latent scale is the reason for the multivariate normal approach. One could instead predict the current ordinal level using the previous ordinal level, see here for examples by Frank Harrell.

-

Ordinal logistic regression for the two sample test is practically identical to the Mann-Whitney test, see: ‘‘Whitehead, J. (1993). Sample size calculations for ordered categorical data. Statistics in Medicine, 12, 2257–2271’’. You can then think of ordinal regression as a way to extend non-parametric methods to more complex scenarios than simple unadjusted comparisons. ↩︎

-

By unidimensional, I mean univariate data that reflects a single dimension. A counter example would be zero-inflated count data. Although seemingly univariate, such data reflect at least two dimensions. ↩︎

-

Heller, G. Z., Manuguerra, M., & Chow, R. (2016). How to analyze the Visual Analogue Scale: Myths, truths and clinical relevance. Scandinavian Journal of Pain, 13(1), 67–75. https://doi.org/10.1016/J.SJPAIN.2016.06.012 ↩︎

-

Liu, Q., Shepherd, B. E., Li, C., & Harrell, F. E. (2017). Modeling continuous response variables using ordinal regression. Statistics in Medicine, 36(27), 4316–4335. https://doi.org/10.1002/sim.7433 ↩︎

-

Manuguerra, M., & Heller, G. Z. (2010). Ordinal regression models for continuous scales. The International Journal of Biostatistics, 6(1). https://doi.org/10.2202/1557-4679.1230 ↩︎

-

Uanhoro, J. O., & Stone-Sabali, S. (2023). Beyond Group Mean Differences: A Demonstration With Scale Scores in Psychology. Collabra: Psychology, 9(1), 57610. https://doi.org/10.1525/collabra.57610 ↩︎

-

Albert, J., & Chib, S. (1995). Bayesian residual analysis for binary response regression models. Biometrika, 82(4), 747–759. ↩︎

-

One I cannot justify :). ↩︎

-

Tasky, K. K., Rudrud, E. H., Schulze, K. A., & Rapp, J. T. (2008). Using Choice to Increase On-Task Behavior in Individuals with Traumatic Brain Injury. Journal of Applied Behavior Analysis, 41(2), 261–265. https://doi.org/10.1901/jaba.2008.41-261 ↩︎

Comments powered by Talkyard.